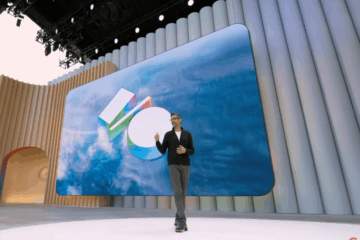

Since its original launch at Google I/O 2024, Project Astra has become a testing ground for Google’s AI assistant ambitions. The multimodal, all-seeing bot is not a consumer product, really, and it won’t soon be available to anyone outside of a small group of testers. What Astra represents instead is a collection of Google’s biggest, wildest, most ambitious dreams about what AI might be able to do for people in the future. Greg Wayne, a research director at Google DeepMind, says he sees Astra as “kind of the concept car of a universal AI assistant.”

Eventually, the stuff that works in Astra ships to Gemini and other apps. Already that has included some of the team’s work on voice output, memory, and some basic computer-use features. As those features go mainstream, the Astra team finds something new to work on.

This year, at its I/O developer conference, Google announced some new Astra features that signal how the company has come to view its assistant – and just how smart it thinks that assistant can be. In addition to answering questions and using your phone’s camera to remember where you left your glasses, Astra can now accomplish tasks on your behalf. And it can do it with …