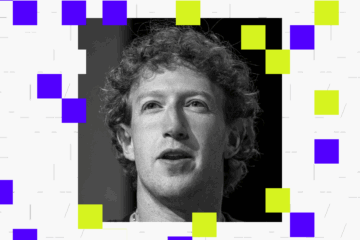

Credit: Aurich Lawson | Getty Images

We’re in phase three of our machine-learning project now—that is, we’ve gotten past denial and anger, and we’re now sliding into bargaining and depression. I’ve been tasked with using Ars Technica’s trove of data from five years of headline tests, which pair two ideas against each other in an “A/B” test to let readers determine which one to use for an article. The goal is to try to build a machine-learning algorithm that can predict the success of any given headline. And as of my last check-in, it was… not going according to plan.

I had also spent a few dollars on Amazon Web Services compute time to discover this. Experimentation can be a little pricey. (Hint: If you’re on a budget, don’t use the “Autopilot” mode.)

We’d tried a few approaches to parsing our collection of 11,000 headlines from 5,500 headline tests (half winners, half losers). First, we had taken the whole corpus in comma-separated values (CSV) form and tried a “Hail Mary” (or, as I see it in retrospect, a “Leeroy Jenkins”) with the Autopilot tool in AWS’ SageMaker Studio. This came back with a validation accuracy of 53 percent. That turned out to be not that bad, because when I used a model specifically built for natural-language processing, AWS’ BlazingText, the result was 49 percent accuracy, slightly worse than a coin toss. (If much of this sounds like nonsense, by the way, I recommend revisiting Part 2, where I go over these tools in much more detail.)
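To put the coin-toss comparison in concrete terms, here is a minimal sketch in plain Python. It is not the code from the project; it only assumes that each of the 5,500 tests contributes one winner and one loser, so a random guesser should land near 50 percent. The helper names (`accuracy`, `chance_band`) and the validation-set size of 1,100 are hypothetical, chosen purely for illustration, since the actual split isn't given here.

```python
import math
import random


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def chance_band(n, z=1.96):
    """Approximate 95% range of accuracies a fair coin would score on n examples.

    Uses the normal approximation to the binomial with p = 0.5.
    """
    half_width = z * math.sqrt(0.25 / n)
    return 0.5 - half_width, 0.5 + half_width


# 5,500 tests, each contributing one winner (1) and one loser (0).
labels = [1, 0] * 5500

# A "model" that just flips a coin for every headline.
rng = random.Random(0)  # fixed seed so the run is repeatable
coin_flips = [rng.randint(0, 1) for _ in labels]
coin_accuracy = accuracy(coin_flips, labels)

# ASSUMPTION: a hypothetical validation set of 1,100 headlines.
low, high = chance_band(n=1100)

print(f"coin-flip accuracy on the balanced set: {coin_accuracy:.3f}")
print(f"range a coin could plausibly score on 1,100 examples: {low:.3f} to {high:.3f}")
```

The point of the band is that an accuracy a point or two on either side of 50 percent is hard to tell apart from guessing unless the validation set is large, which is why neither result above looks like a model that has learned much.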